(This article was translated by AI and then reviewed by a human.)

Preface

I recently found out that GitHub offers free access to a range of AI models, including GPT-4o, Llama-3.3, and Phi-3.5, and even provides an API for connecting them to your programs!

GitHub Models is mainly intended for testing while you develop generative AI applications. Because it is a trial service, there are limits on speed and token counts. Still, it's great for personal projects, and the daily request limits aren't too low either (50 to 150 requests, depending on the model). If you're interested, give it a try~

If you find that access isn't available yet, you can join the waitlist: https://github.com/marketplace/models/waitlist

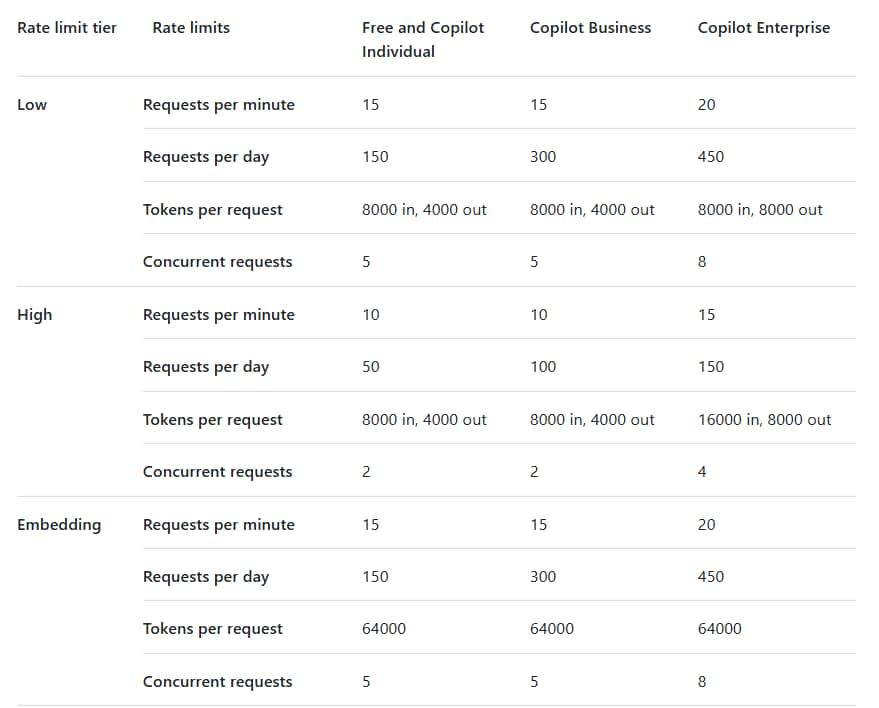

Rate Limits

Before you start using them, let's check the rate limits.

GitHub Models groups rate limits into tiers such as Low, High, and Embedding.

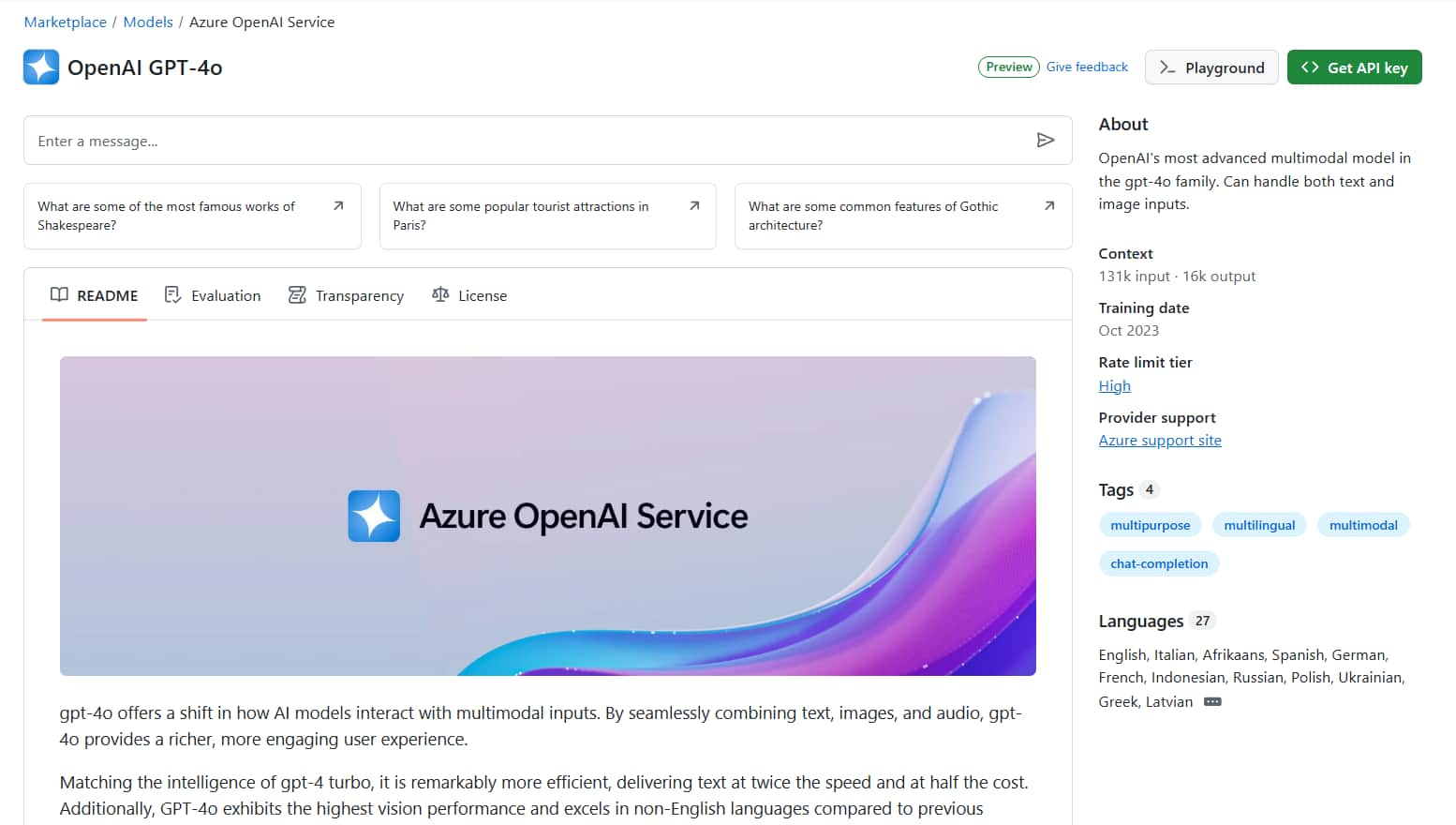

You can find each model’s rate limit level (“Rate limit tier”) on the model introduction and Playground pages.

* There are other options like Azure OpenAI o1-preview and Azure OpenAI o1-mini listed at the bottom of the table, but I haven’t been able to use them yet.

For example, the rate limits for GPT-4o (classified as "High") are:

Requests per minute: 10

Requests per day: 50

Tokens per request: 8000 in, 4000 out

Concurrent requests: 2

* If you're a Copilot Business or Copilot Enterprise user, you'll have higher usage limits.
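To stay under these limits in your own program, you can throttle requests on the client side. The sketch below is one possible approach, not something GitHub provides: the class name `SlidingWindowLimiter` is made up for illustration, the defaults are the GPT-4o numbers listed above, and it assumes the daily quota behaves like a rolling 24-hour window (the service may count it differently).

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Client-side limiter that tracks request timestamps in two windows."""

    def __init__(self, per_minute=10, per_day=50, clock=time.monotonic):
        self.per_minute = per_minute
        self.per_day = per_day
        self.clock = clock      # injectable so the limiter can be tested
        self.minute = deque()   # timestamps from the last 60 seconds
        self.day = deque()      # timestamps from the last 24 hours (assumed rolling)

    def _expire(self, now):
        # Drop timestamps that have left each window.
        while self.minute and now - self.minute[0] >= 60:
            self.minute.popleft()
        while self.day and now - self.day[0] >= 86400:
            self.day.popleft()

    def try_acquire(self):
        """Return True and record the request if both windows have room."""
        now = self.clock()
        self._expire(now)
        if len(self.minute) >= self.per_minute or len(self.day) >= self.per_day:
            return False
        self.minute.append(now)
        self.day.append(now)
        return True
```

Call `try_acquire()` before each API request and wait (or skip the request) when it returns `False`. It does not cover the "Concurrent requests" limit, which would need a separate semaphore.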

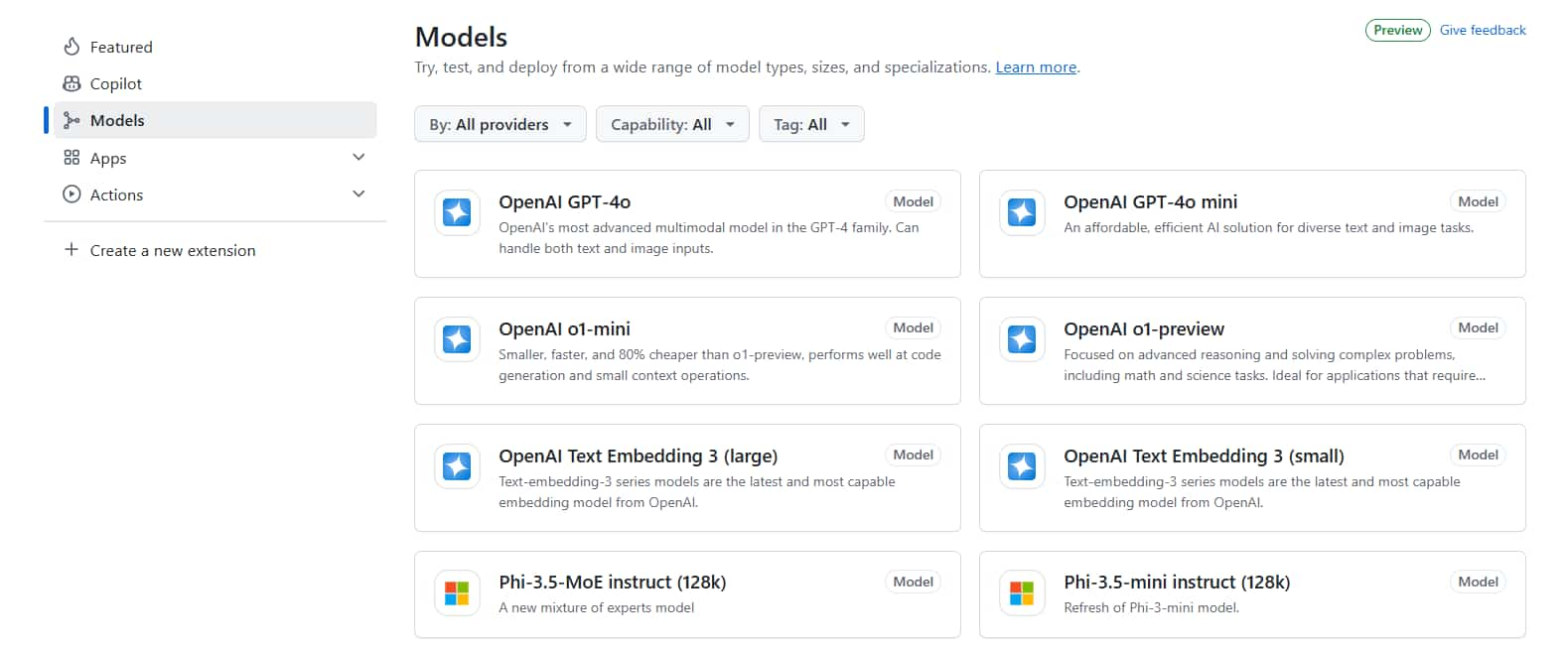

Models List

Which models does GitHub Models offer?

Here's the complete list of supported models: https://github.com/marketplace/models/catalog

For example, chat models include:

- OpenAI GPT-4o

- OpenAI GPT-4o mini

- DeepSeek-V3-0324

- DeepSeek-R1

- Llama 4 Maverick 17B 128E Instruct FP8

- Llama-3.3-70B-Instruct

- Llama-3.2-90B-Vision-Instruct

- Phi-4

- Phi-3.5-MoE instruct

- Phi-3.5-vision instruct

- Mistral Large

- Mistral Small 3.1

- Codestral 25.01

- Cohere Command R+

- AI21 Jamba 1.5

- JAIS 30b Chat

- …(more)

And Embedding Models:

- OpenAI Text Embedding 3

- Cohere Embed v3 Multilingual

- …(more)

Clicking any model opens its detailed introduction page.

The page shows a description of the model, evaluation scores, the license, and so on.

The panel on the right also lists an overview, Context (the input and output token limits of the model itself, separate from the GitHub Models rate limits), the training data date, the rate limit level ("Rate limit tier"), the provider, supported languages, and other information.

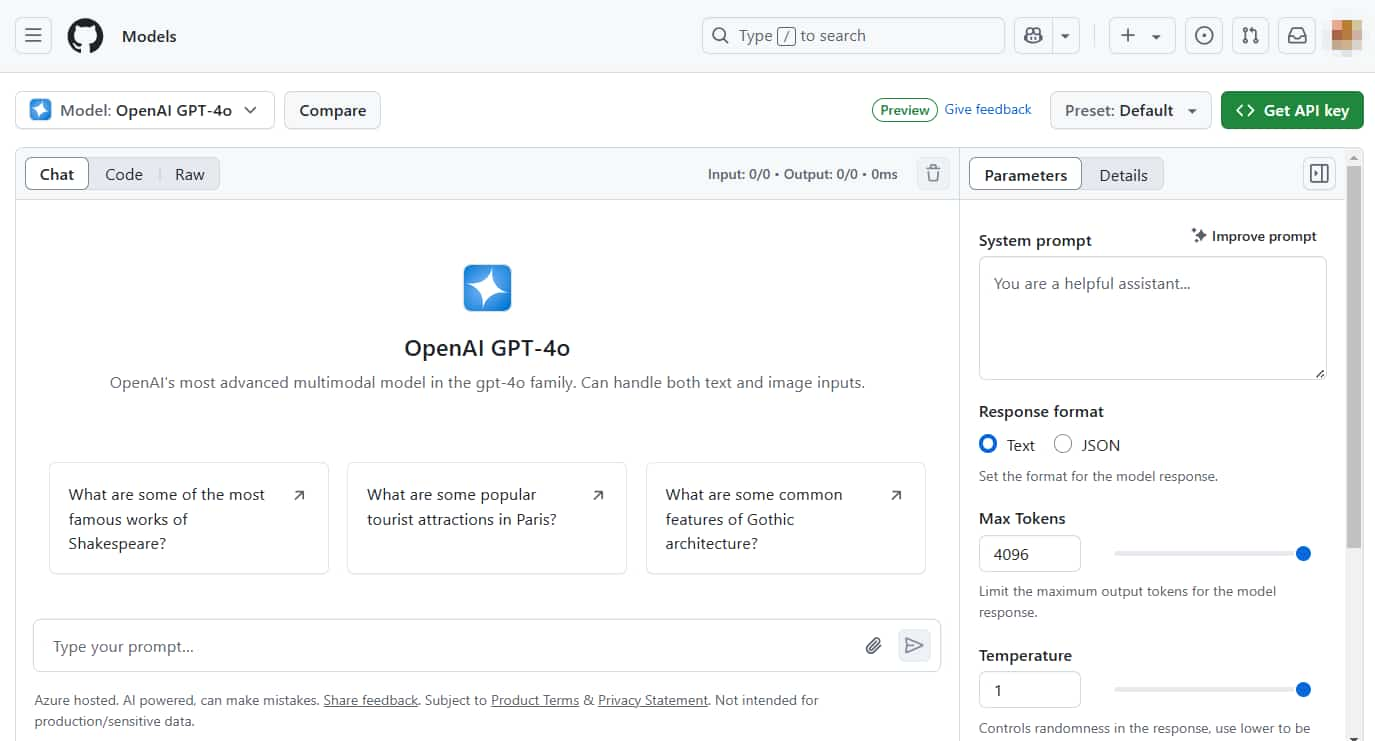

Playground

From the model introduction page, click "Playground" in the top-right corner, or select a model from the GitHub Marketplace.

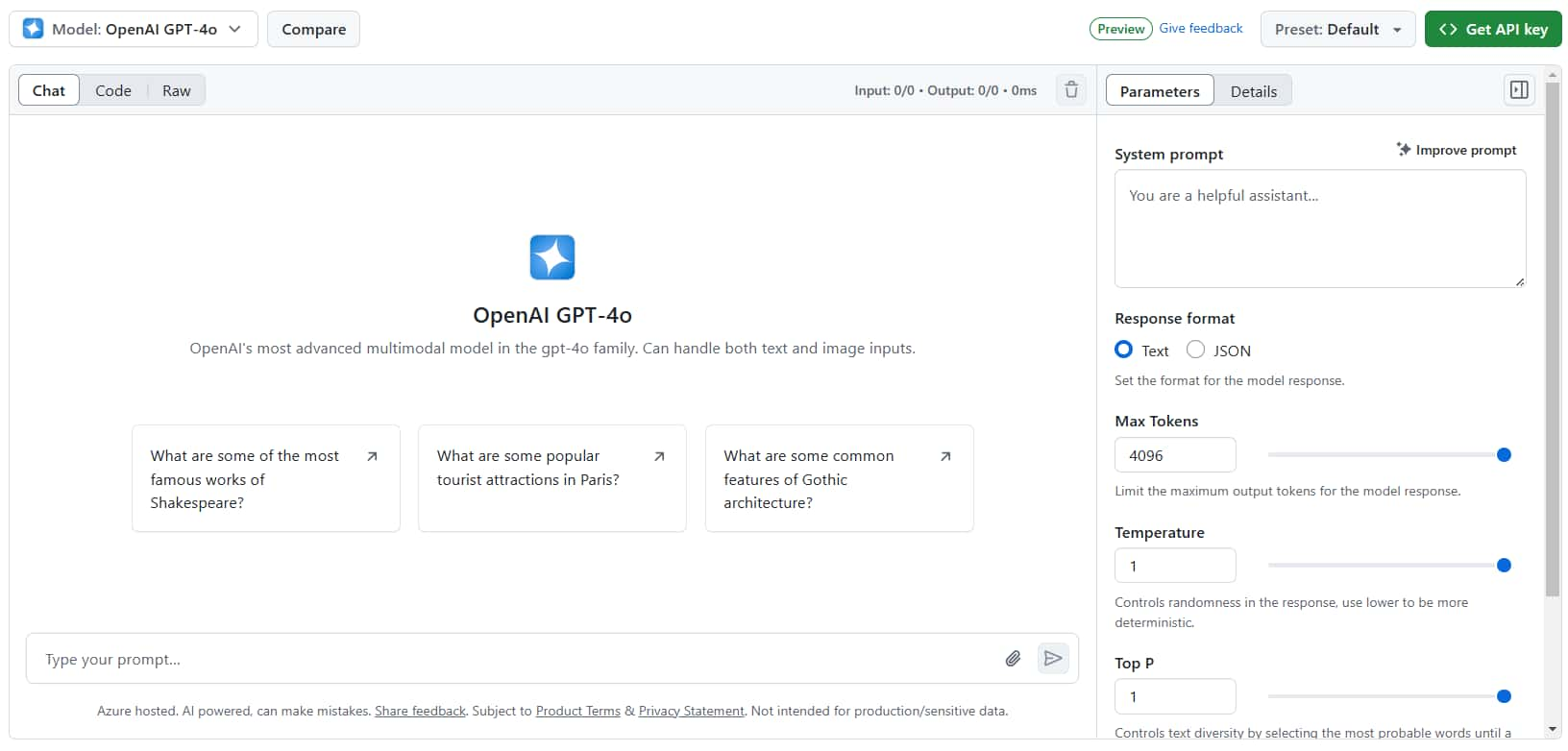

You'll then enter the Playground page, where you can interact with the model directly.

* For example, the Playground of GPT-4o

First, look at the right side where you can switch between "Parameters" and "Details":

- Parameters: Set and adjust model parameters (System prompt, Response format, Max Tokens, Temperature…).

- Details: Information about the model (similar to the model introduction page).

Next, the left side is divided into "Chat", "Code", and "Raw":

- Chat: Like using ChatGPT or Gemini, you can chat with the model in multiple rounds.

- Code: Shows how to call the API, with examples in different programming languages. The API section below explains more.

- Raw: The raw data of your conversation with the model.

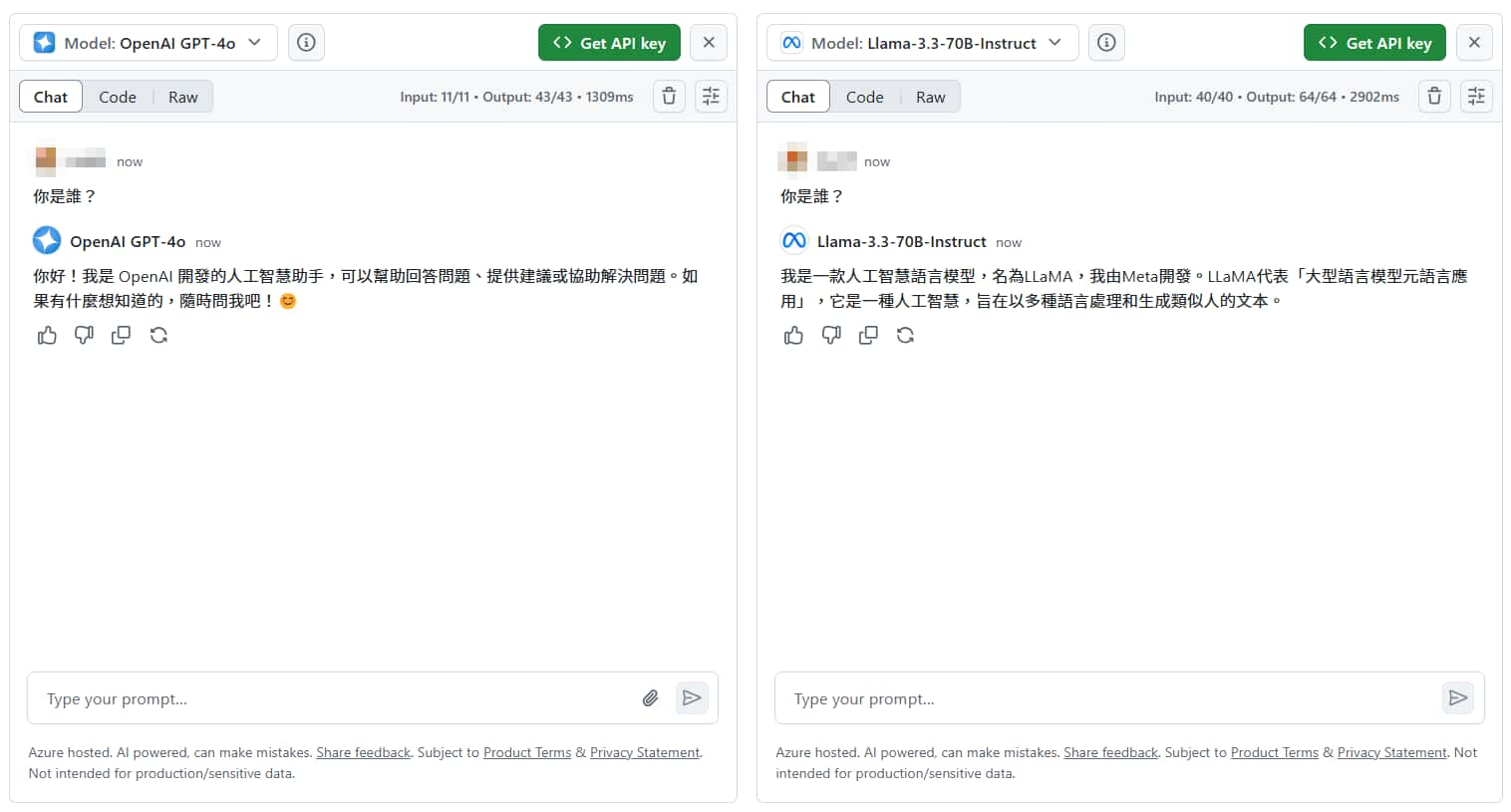

Also, in the top left of the page, you can see the "Compare" button, which is used to compare the responses of two different models. After you enter a question (prompt), it will send it to both models at the same time, making it easy to compare which model gives a better answer.

If you want to save (or even share) the currently adjusted parameters and chat history, you can use the "Preset" function in the upper right corner.

API

As the title mentions, besides the Playground web interface, GitHub Models also provides an API that we can integrate into our own programs for testing.

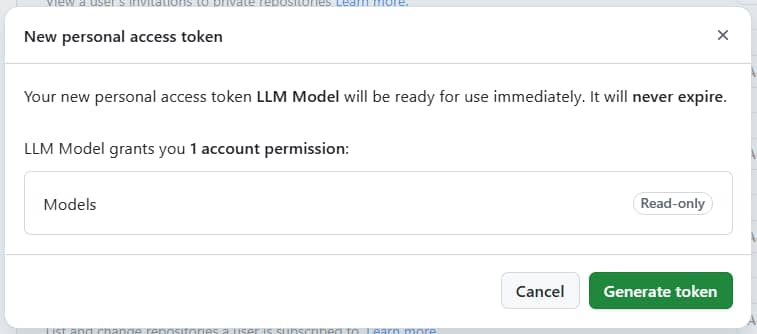

Create GitHub Token

Before starting to use the API, we need to create a Token on GitHub for authentication.

* For information about GitHub Tokens, you can refer to this official document: Managing your personal access tokens

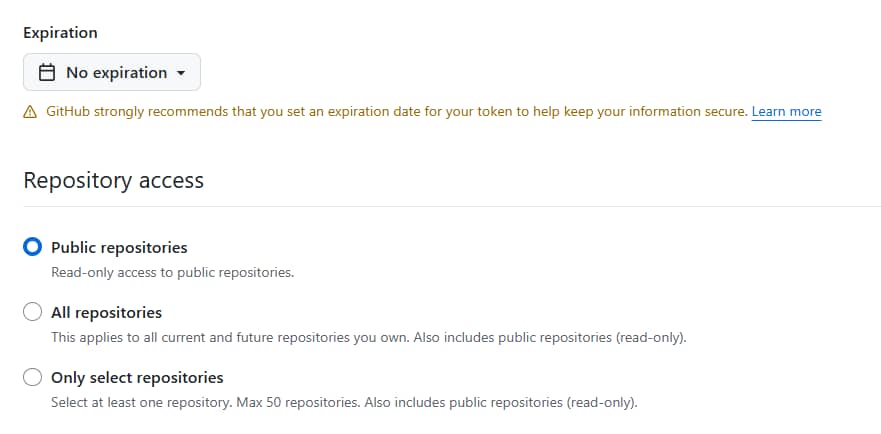

Go to Settings > Developer settings (bottom-left corner) > Personal access tokens > Fine-grained tokens.

Click "Generate new token".

You can change Expiration to “No expiration” (no time limit).

For the Repository access section, just keep it as “Public Repositories”.

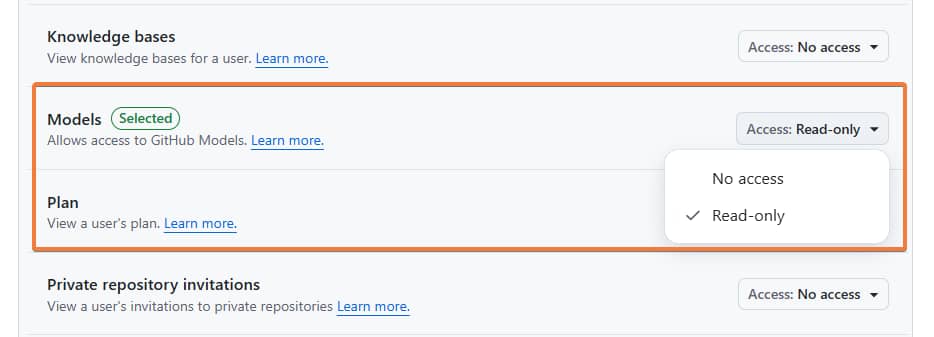

Important:

Go to Permissions > Account permissions > Models, and change it to “Read-only”.

This is needed to have access to GitHub Models.

After filling in the fields, click the "Generate token" button at the bottom.

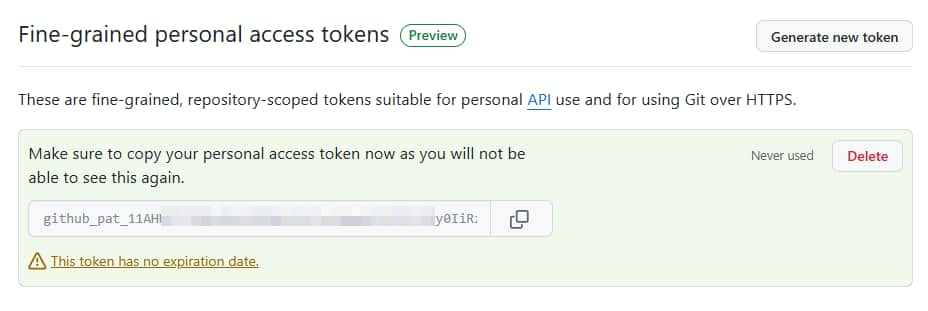

Copy and save the token carefully. If you forget it later, you will need to generate a new one.

A fine-grained personal access token looks something like this: github_pat_11AHxxxxxxxxxxxxxxxxxxxxxxxxxCp7pLSr3a
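Since the token cannot be viewed again, and should not be pasted into source code, one common pattern is to keep it in an environment variable. The following is a minimal sketch under that assumption; the function name and the variable name `GITHUB_MODELS_TOKEN` are hypothetical choices, not something GitHub requires.

```python
import os


def load_github_token(env_var="GITHUB_MODELS_TOKEN"):
    """Read the token from an environment variable and sanity-check it."""
    # env_var is a hypothetical name; use whatever name suits your setup.
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    if not token.startswith("github_pat_"):
        # Fine-grained tokens start with this prefix, as in the sample above.
        raise ValueError("Expected a fine-grained token starting with 'github_pat_'.")
    return token
```

The prefix check only catches obvious mistakes, such as pasting a different kind of token. It does not verify that the token is valid or has the Models permission.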

Usage Example

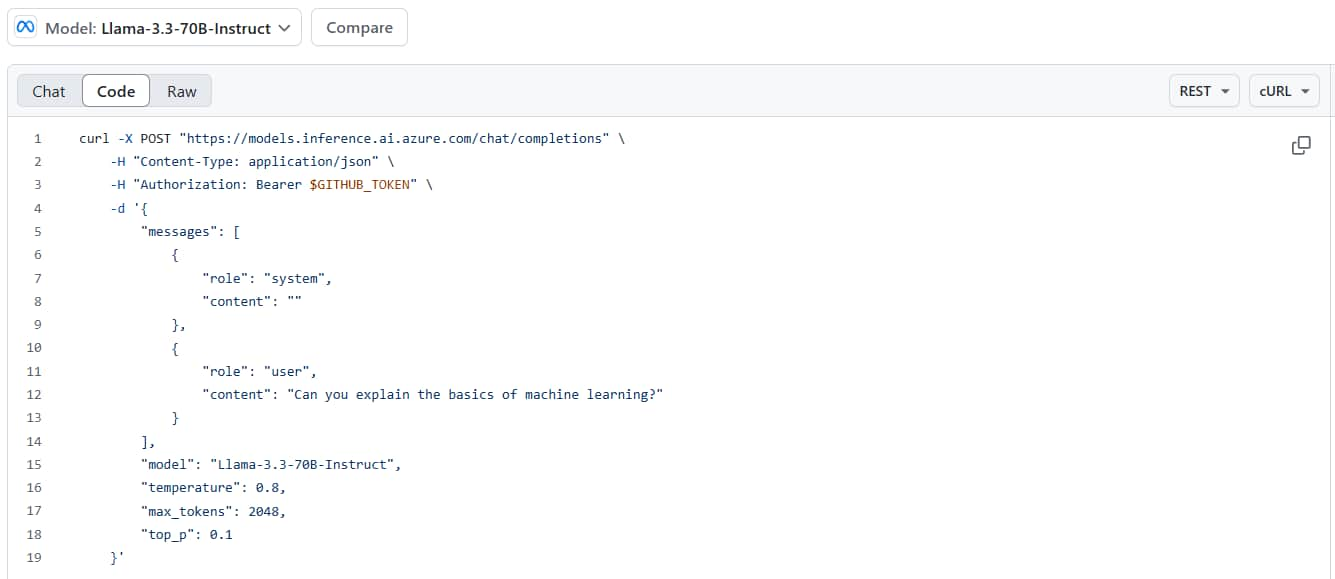

Go back to the "Code" tab on the Playground page (for example, GPT-4o's Playground).

In the top-right corner, you can use a dropdown to choose different programming languages or SDKs (OpenAI SDK or Azure AI Inference SDK).

Switch the language to REST to see the actual request URL, headers, body, and so on. The API format is the same as the OpenAI API, so if the package, framework, or software you use supports the OpenAI API format, you can point it at GitHub Models directly. Once you've finished testing with GitHub Models, switching to a stable paid service such as Azure OpenAI or OpenAI is also simple.
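Because the format is shared, switching providers can come down to changing a base URL and an API key. Here is a rough sketch of that idea. The `Provider` class, the `PROVIDERS` table, and the environment variable names are all hypothetical. The GitHub Models base URL is the one shown in the REST tab, and the OpenAI one is its public API base. Azure OpenAI is left out because its URL and auth header depend on your own deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    base_url: str   # everything before "/chat/completions"
    token_env: str  # hypothetical env var name holding the API key


# Hypothetical lookup table; add or rename entries to suit your project.
PROVIDERS = {
    "github": Provider("https://models.inference.ai.azure.com", "GITHUB_MODELS_TOKEN"),
    "openai": Provider("https://api.openai.com/v1", "OPENAI_API_KEY"),
}


def chat_completions_url(provider_name):
    """Build the chat endpoint URL for the chosen provider."""
    return PROVIDERS[provider_name].base_url.rstrip("/") + "/chat/completions"


print(chat_completions_url("github"))
print(chat_completions_url("openai"))
```

With this layout the request body stays the same and only the provider name changes, although model names may still differ between services.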

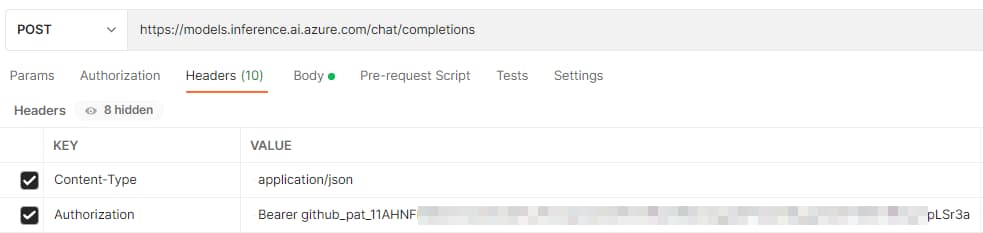

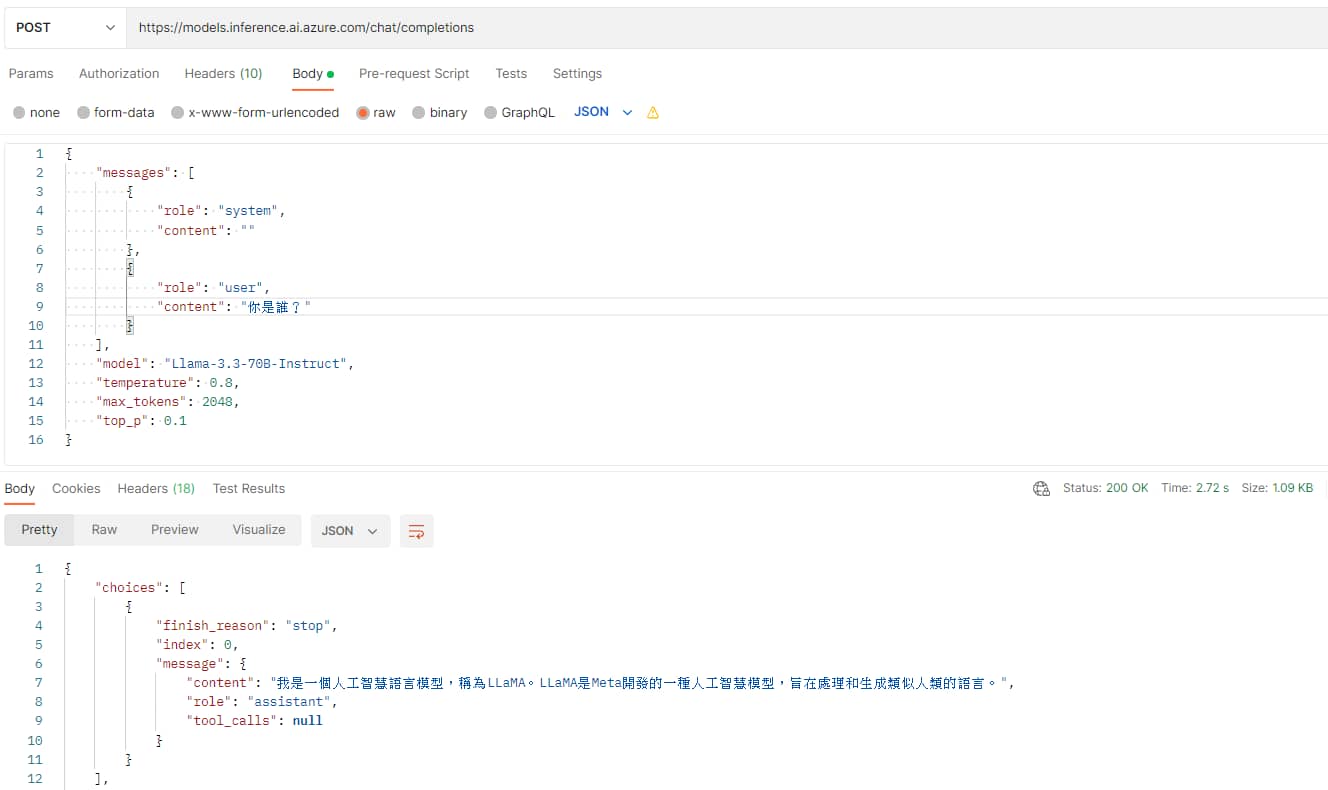

Here, I use Postman to show how to send a request.

Request URL: https://models.inference.ai.azure.com/chat/completions

Request Method: POST

Request Headers:

Content-Type: application/json

Authorization: Bearer github_pat_11AHxxxxxxxxxxxxxxxxxxxxxxxxxCp7pLSr3a

* The string after "Bearer" in the "Authorization" header is the "personal access token" you just created.

Body (in JSON format):


* Only the messages and model fields are required.
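The request described above (URL, method, headers, and the two required body fields) can also be sketched in Python using only the standard library. This is an illustration rather than the code from the Playground: the function names are made up, and the model identifier `"gpt-4o"` is an assumption, so check the "Code" tab for the exact name of the model you picked.

```python
import json
import urllib.request

ENDPOINT = "https://models.inference.ai.azure.com/chat/completions"


def build_chat_request(token, prompt, model="gpt-4o"):
    """Build (but do not send) a POST request with the two required fields."""
    body = {
        "model": model,  # assumed identifier; confirm it in the Playground
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

For example, `send(build_chat_request(token, "Hello"))` would perform the same call as the Postman setup, where `token` is your personal access token. Keeping the build step separate from the send step makes it easy to inspect the request without using up your daily quota.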

Sample response data:


* However, I suspect the request includes other prompt content behind the scenes, because usage > prompt_tokens in the response is significantly larger than the number of prompt tokens I sent. If anyone knows why, please leave a comment to help me understand~🙏
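To keep an eye on token usage, you can pull the counts out of each response. The sketch below assumes the response follows the OpenAI chat completions layout (`choices[0].message.content` and a `usage` object). The function name is hypothetical, and the sample dictionary is invented for illustration; it is not the response I actually received.

```python
def summarize_response(response):
    """Pull the reply text and token counts out of an OpenAI-style response."""
    usage = response.get("usage", {})
    return {
        "reply": response["choices"][0]["message"]["content"],
        "prompt_tokens": usage.get("prompt_tokens"),
        "completion_tokens": usage.get("completion_tokens"),
        "total_tokens": usage.get("total_tokens"),
    }


# Hypothetical response, invented for illustration only.
example = {
    "choices": [{"message": {"role": "assistant", "content": "Hi there!"}}],
    "usage": {"prompt_tokens": 12, "completion_tokens": 4, "total_tokens": 16},
}

summary = summarize_response(example)
print(summary["reply"], summary["prompt_tokens"])
```

Logging these numbers for each request makes it easier to see how far `prompt_tokens` drifts from what you expect.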

Conclusion

If you are developing an LLM application or your usage is low, I recommend giving GitHub Models a try.

If you're interested in Generative AI, make sure to follow the “IT Space” Facebook page to stay updated on the latest posts! 🔔

References:

GitHub Marketplace Models

GitHub Models documentation

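I'm not the best one, but I want to be the one who works the hardest.

- Lee Yang (Taiwanese national badminton player)

🔻 If you enjoyed this, feel free to reward me with 5 claps below~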